Host Your Own Chat LLM Interface with LibreChat

I wanted to use several LLMs side by side, because AI tools like ChatGPT and Gemini each have their own strengths. You can use their separate chat interfaces, but that brings problems: logging in to each service separately, exposing personal data to multiple providers, and having little control over how the models are used. That is where LibreChat comes in: a self-hosted alternative that puts control back in your hands while keeping your data secure and private.

This blog is written by Akshat Virmani at KushoAI. We're building the fastest way to test your APIs. It's completely free and you can sign up here.

Let’s look at how you can use LibreChat to integrate various LLMs with a unified interface to experiment with different models like ChatGPT, Gemini, and Claude.

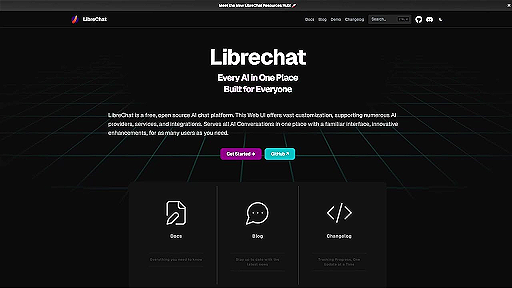

What is LibreChat?

LibreChat is a free, open-source platform for deploying and chatting with various AI models, such as ChatGPT and Gemini, in one place. It supports multiple LLM backends, including OpenAI GPT models and other compatible language models. Its main features include:

- Open-Source: It is free to use and modify.

- Backend Flexibility: Supports popular LLM APIs as well as self-hosted models.

- User-Friendly Interface: A sleek and modern chat interface designed for seamless interactions.

- Multi-Platform Support: Deployable on local machines, cloud environments, or edge devices.

- Multiple LLMs: LibreChat lets you choose from many models and interact with them in one place.

Why Host Your Own Chat LLM Interface?

- Cost Efficiency

Self-hosting can cut costs by replacing several subscriptions with a single interface. Subscriptions add up with user limits and overage fees, and API credits for frequent use can cost hundreds monthly. Running self-hosted models avoids those recurring fees, while hosted models accessed through LibreChat are still billed by their providers. A one-time setup gives you more predictable and scalable costs over time.

- Customisation

LibreChat enables you to design the interface and functionality according to your requirements. You can add plugins for tasks like database queries, fetching external data, or triggering automated workflows, and you can customise the UI with your brand and style.

- Scalability

Self-hosting gives you the ability to scale resources based on demand and to optimise your infrastructure for better performance. If you have domain-specific data, you can fine-tune your LLM and integrate it with LibreChat for a tailored experience.

Getting Started with LibreChat

Follow these steps to host your own chat LLM interface with LibreChat.

Step 1: System Requirements

Before starting, ensure you have the following:

- A server or local machine with sufficient resources (CPU, GPU for large models, RAM).

- Docker installed (recommended for easy setup).
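A quick way to confirm these prerequisites is to check that the tools are on your PATH. The sketch below is only an illustration: check_tool is a hypothetical helper name (not part of LibreChat), and it assumes a POSIX shell such as the one on Linux, macOS, or Git Bash.

```shell
#!/bin/sh
# Report whether a required command-line tool is on the PATH.
# Returns 0 if the tool is found, 1 otherwise.
check_tool() {
    if command -v "$1" >/dev/null 2>&1; then
        echo "found: $1"
    else
        echo "missing: $1"
        return 1
    fi
}

# Check the tools this guide relies on. "|| true" keeps the loop going
# so that every missing tool is reported in one pass.
for tool in git docker; do
    check_tool "$tool" || true
done
```

If docker is reported as found, running "docker compose version" should confirm that the Compose plugin is available too.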

Step 2: Clone the LibreChat Repository

LibreChat is hosted on GitHub. Clone the repository to your server:

git clone https://github.com/danny-avila/LibreChat.git

cd LibreChat

Step 3: Configure Your Environment

Once Docker Desktop is installed and running, navigate to the project directory and copy the contents of “.env.example” to a new file named “.env”.

On Windows, this can be done with the command “copy .env.example .env”. On Linux or macOS, use “cp .env.example .env”.
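If you re-run the setup later, a plain copy would overwrite any keys you have already added to “.env”. The following is a minimal sketch of a safer copy for POSIX shells; init_env is a hypothetical helper name introduced here for illustration.

```shell
# Copy .env.example to .env in the given directory (default: current one)
# unless .env already exists, so existing settings are never overwritten.
init_env() {
    dir="${1:-.}"
    if [ -f "$dir/.env" ]; then
        echo "kept existing .env"
    elif [ -f "$dir/.env.example" ]; then
        cp "$dir/.env.example" "$dir/.env"
        echo "created .env"
    else
        echo "no .env.example found in $dir" >&2
        return 1
    fi
}
```

From inside the LibreChat directory you would call it as "init_env ." and then edit “.env” to add your API keys.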

Step 4: Deploy Using Docker

Run the following command to deploy LibreChat using Docker:

docker compose up -d

You should now have LibreChat running locally on your machine.

Below is a screenshot of the commands running on my system:

And this is how Docker looks once the containers are up and running:

Step 5: Access LibreChat

Once the deployment is complete, access the interface via your web browser using the server's IP and the configured port (e.g., http://localhost:3080, the default set by PORT in “.env”).

Opening that address brings up the LibreChat login page. After you register and log in, you can start using LibreChat.
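If you are unsure which port your instance uses, you can read it from “.env”. The sketch below assumes the port is set by a line starting with PORT=, and it falls back to 3080, which is assumed here to be LibreChat's default. port_from_env is a hypothetical helper name, and it does not handle quoted values or trailing comments.

```shell
# Print the PORT value from an env file, or the fallback if the file is
# missing or has no uncommented PORT= line.
port_from_env() {
    file="$1"
    fallback="$2"
    port=""
    if [ -f "$file" ]; then
        # Strip the "PORT=" key and keep the last matching line.
        port="$(sed -n 's/^PORT=//p' "$file" | tail -n 1)"
    fi
    echo "${port:-$fallback}"
}

# Build the local URL to open in the browser.
echo "http://localhost:$(port_from_env .env 3080)"
```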

Best Practices:

These are some of the best practices to follow when using LibreChat.

Documentation: Refer to the official documentation for detailed instructions and guides regarding the installation or use cases of LibreChat.

Security: Implement robust security measures when deploying LibreChat, such as encrypting data in transit and managing your deployment with Infrastructure as Code (IaC) tools.

Deployment: Choose a suitable deployment method and hosting or network service, such as Docker Compose or Cloudflare, and consider factors like ease of use, security, and affordability when deploying LibreChat.

Backup and Version Control: Regularly back up your LibreChat instance and use version control systems like Git to track changes and manage different versions of your code.
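As one way to approach the backup advice above, the sketch below archives the configuration files into a timestamped tarball. It is an illustration under assumptions: backup_config is a hypothetical helper name, the file list (.env, docker-compose.override.yml, librechat.yaml) is a guess at what a typical setup contains, and it does not back up the database or chat history.

```shell
# Archive known config files from a project directory into a timestamped
# tarball in the destination directory. Files that do not exist are skipped.
# Prints the archive path on success; returns 1 if nothing was found.
backup_config() {
    src="$1"
    dest="$2"
    stamp="$(date +%Y%m%d-%H%M%S)"
    archive="$dest/librechat-config-$stamp.tar.gz"
    files=""
    # Assumed file list; adjust it to match your own setup.
    for f in .env docker-compose.override.yml librechat.yaml; do
        if [ -f "$src/$f" ]; then
            files="$files $f"
        fi
    done
    if [ -z "$files" ]; then
        echo "nothing to back up in $src" >&2
        return 1
    fi
    mkdir -p "$dest"
    # $files is intentionally unquoted so each name is a separate argument.
    tar -czf "$archive" -C "$src" $files
    echo "$archive"
}
```

Because “.env” holds API keys, keep the resulting archive somewhere private rather than committing it to a Git repository.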

Troubleshooting

You might run into some problems while installing LibreChat; the following are some of the common troubleshooting tips:

- Docker Errors: Check logs for specific error messages, ensure Docker and its dependencies are up-to-date and running, and verify configurations.

- LibreChat Login Error: If you have to log in every time you refresh your LibreChat instance running locally in Docker, try this: create a new file called “docker-compose.override.yml” in the same directory as “docker-compose.yml”. Open the override file you created and paste the command below.

Save it and restart your LibreChat instance using the “docker compose up -d” command. You can refer to this blog for more information.

- Access Issues: Confirm correct network settings, permissions, and firewall configurations.

- Performance Slowdowns: Analyse resource usage, optimise model parameters, and check that the machine meets the required specifications.
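For the Docker-related tips above, it can help to gather the container status and recent logs in one step. The sketch below is illustrative: show_diagnostics is a hypothetical helper name, and it assumes you run it from the LibreChat directory that contains “docker-compose.yml”.

```shell
# Print container status and recent logs for the Compose project in the
# current directory. Returns 1 early if docker is not installed.
show_diagnostics() {
    if ! command -v docker >/dev/null 2>&1; then
        echo "docker not found on PATH"
        return 1
    fi
    # List the project's containers and their current state.
    docker compose ps
    # Show the last 50 log lines from each service.
    docker compose logs --tail 50
}
```

Run "show_diagnostics" after "docker compose up -d" and look for containers that are restarting or have exited, then read their log lines for the specific error.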

Conclusion

There you have it. In this blog, we covered what LibreChat is and how to host it locally. Note that using LibreChat doesn't mean you get all the models for free; to use it to its full potential, you must buy API access to the external AI services.
